Unlocking On-Device AI with Gemini Nano and the Future of Private Intelligence

We recently announced NimbleEdge AI, a privacy-first, always-on on-device AI assistant that runs entirely on smartphones. With zero reliance on the cloud and ultra-low latency, it delivers fast, secure, and contextually aware intelligence powered by the NimbleEdge on-device AI platform. By ensuring that user data never leaves the device and optimizing models for real-time inference, we are reimagining the mobile AI experience.

Today, we are pleased to share a significant advancement: seamless integration with Google’s Gemini Nano, ushering in a new era of mobile large language model (LLM) deployment.

Thanks to newly available Gemini Nano capabilities on select Android devices, the NimbleEdge AI Assistant can now leverage system-integrated LLMs directly via the operating system. This removes the need to download a model or enlarge the application package, enabling truly efficient, scalable, and high-performance on-device intelligence.

Gemini Nano is a system-level on-device LLM, accessible through Google’s AICore system service. It manages model updates, safety filtering, and inference acceleration via native hardware. Through our integration, developers using the NimbleEdge platform can:

This capability is currently supported on Pixel 9 series devices and will expand with future hardware iterations.

No Downloads. No Trade-offs in Android LLM Deployment

Historically, deploying on-device LLMs required bundling models with the application or downloading them upon initial launch. This increased app size, degraded user experience, and added architectural complexity.

With Gemini Nano, these trade-offs are eliminated. Developers now benefit from reduced storage overhead, no model download for users to wait on, and a significant boost in efficiency. This represents a paradigm shift for building private AI applications, enabling real-time natural language processing and on-device generative AI capabilities.
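Since Gemini Nano is currently limited to select devices such as the Pixel 9 series, an app that wants the no-download path where available may still need one of the older approaches elsewhere. The sketch below illustrates that runtime choice in Python. It is a hypothetical illustration, not a NimbleEdge or AICore API: `Backend`, `DeviceCapabilities`, its flags, and `choose_backend` are names invented for this example, and a real app would fill the flags from platform availability checks rather than hard-coding them.

```python
from dataclasses import dataclass
from enum import Enum


class Backend(Enum):
    SYSTEM_LLM = "system"        # OS-provided model (e.g. Gemini Nano via AICore)
    BUNDLED_LLM = "bundled"      # model weights shipped inside the app package
    DOWNLOADED_LLM = "download"  # model weights fetched on first launch


@dataclass
class DeviceCapabilities:
    # Hypothetical flags; a real app would populate these from platform queries.
    system_llm_available: bool
    bundled_model_present: bool


def choose_backend(caps: DeviceCapabilities) -> Backend:
    """Prefer the OS-managed model: no download and no extra app size."""
    if caps.system_llm_available:
        return Backend.SYSTEM_LLM
    if caps.bundled_model_present:
        return Backend.BUNDLED_LLM
    # Last resort: the historical approach of downloading weights at launch.
    return Backend.DOWNLOADED_LLM
```

For example, `choose_backend(DeviceCapabilities(system_llm_available=True, bundled_model_present=False))` returns `Backend.SYSTEM_LLM`. Ordering the checks this way encodes the trade-off described above: the system model costs neither storage nor a first-launch download, so it is tried first.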

To begin integrating Gemini Nano with NimbleEdge:

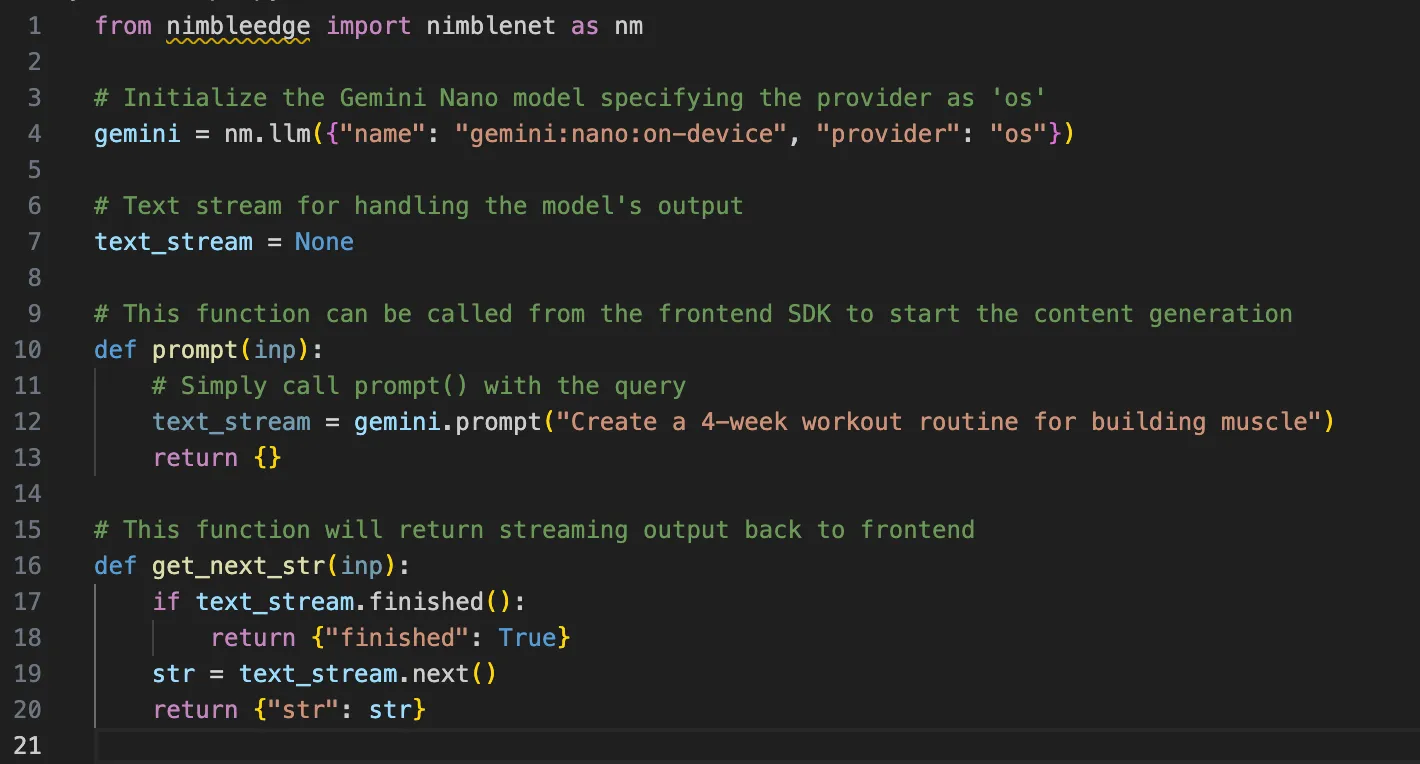

After setup, developers can create Python Workflow Scripts within the NimbleEdge SDK to initiate Gemini Nano LLM inference and stream results to their application interface.
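The flow described above, starting inference from a workflow script and streaming results to the interface, can be sketched as a simple generator pipeline. This is not the NimbleEdge Workflow Script API: `fake_gemini_nano_stream` is a hypothetical stand-in for whatever streaming call the SDK exposes, and `on_chunk` is a hypothetical placeholder for the app's UI callback.

```python
from typing import Callable, Iterator


def fake_gemini_nano_stream(prompt: str) -> Iterator[str]:
    """Hypothetical stand-in for a streaming on-device LLM call.

    It echoes a canned reply word by word so the pipeline can run
    anywhere; a real workflow would call the SDK's inference entry point.
    """
    reply = f"You asked: {prompt}"
    for word in reply.split(" "):
        yield word + " "


def run_inference(prompt: str,
                  generate: Callable[[str], Iterator[str]],
                  on_chunk: Callable[[str], None]) -> str:
    """Forward each generated chunk to the UI as soon as it arrives.

    Returns the full response so the caller can store or post-process it.
    """
    parts: list[str] = []
    for chunk in generate(prompt):
        on_chunk(chunk)      # push partial output to the interface immediately
        parts.append(chunk)  # keep a copy to build the complete response
    return "".join(parts).strip()
```

For example, passing `shown.append` as `on_chunk` with the prompt `"hello"` collects `["You ", "asked: ", "hello "]` while the function returns `"You asked: hello"`. Taking the generator and callback as parameters keeps the pipeline independent of any particular SDK, so the stub can be swapped for a real streaming call.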

Using Gemini Nano in the NimbleEdge platform is super easy with our on-device Python Workflow Scripts, which let you write LLM execution and post-processing logic directly in Python. Read more about them here.
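Post-processing logic of the kind mentioned above runs on the streamed text before it reaches the user. As one illustrative example (our choice, not something the NimbleEdge documentation prescribes), the sketch below regroups arbitrary token chunks into whole sentences, which can help when an interface or a text-to-speech stage wants complete sentences rather than fragments. The function name `sentences_from_stream` and the sentence-splitting rule are hypothetical.

```python
import re
from typing import Iterable, Iterator

# Sentence boundary: ".", "!" or "?" followed by whitespace. This is a
# simplification that will mis-split abbreviations such as "e.g.".
_SENTENCE_END = re.compile(r"(?<=[.!?])\s+")


def sentences_from_stream(chunks: Iterable[str]) -> Iterator[str]:
    """Regroup arbitrary streamed text chunks into whole sentences."""
    buffer = ""
    for chunk in chunks:
        buffer += chunk
        parts = _SENTENCE_END.split(buffer)
        # Every part except the last is a completed sentence.
        for sentence in parts[:-1]:
            if sentence.strip():
                yield sentence.strip()
        # Keep the unfinished tail until more text arrives.
        buffer = parts[-1]
    # Flush whatever is left when the stream ends.
    if buffer.strip():
        yield buffer.strip()
```

For example, feeding the chunks `["Hel", "lo there. How ", "are you? Fi", "ne"]` yields `"Hello there."`, `"How are you?"`, and `"Fine"`. Only the unfinished tail stays buffered, so each sentence is released as soon as it is complete rather than after the whole response.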

With minimal code, applications can deliver robust on-device language model performance, entirely independent of the cloud.

The integration of Gemini Nano represents a key milestone in the advancement of on-device AI, mobile LLM applications, and privacy-preserving infrastructure. Industry leaders are converging on this approach:

The shift is unmistakable: AI is moving from the cloud to the device.

This change is more than a technical optimization—it redefines the user-AI relationship:

At NimbleEdge, we view this industry transition as fundamental to the next generation of AI: private, adaptive, and always-available intelligence. We are developing a comprehensive, developer-centric ecosystem for high-performance AI workflows executed entirely on-device.

Our platform enables:

In the coming months, we will roll out:

Our mission is to empower developers to build powerful, efficient, and trustworthy AI applications by reimagining intelligence on-device.

The era of monolithic, cloud-dependent AI is coming to a close. The next generation of intelligent systems will be decentralized, efficient, and embedded seamlessly into everyday devices.

As Gemini Nano and NimbleEdge converge, we are ushering in a new standard for AI: always available, always local, and always private.

If you are building mobile experiences or AI-enabled apps, we welcome you to explore what’s possible with NimbleEdge.

Register now to access NimbleEdge AI with Gemini Nano support.

We would love to hear from you: email us your thoughts at team-ai@nimbleedgehq.ai or join our Discord.
