The State of On-Device AI: What’s Missing in Today’s Landscape

On-Device AI: Poised for Scale, Yet Fundamentally Underserved

The global AI market was valued at USD 233.46 bn in 2024 and is projected to reach USD 1,771.62 bn by 2032, at a CAGR of 29.2%, according to Fortune Business Insights. As demand for faster, more secure, and context-aware inference intensifies, on-device AI is emerging as a critical paradigm for real-time, privacy-sensitive applications across sectors.

Despite promising momentum, significant gaps remain, especially in developer tooling, privacy infrastructure, and deployment scalability. These limitations hinder the widespread adoption of on-device AI, even as the underlying hardware becomes increasingly capable.

On-device AI refers to the execution of Machine Learning (ML) and Artificial Intelligence (AI) models directly on end-user devices - ranging from smartphones and wearables to industrial systems - without routing data through remote servers. This architecture offers several inherent advantages:

Reduced latency, enabling real-time decision-making and personalization

Offline functionality, ensuring availability regardless of connectivity

Data locality, which strengthens privacy and regulatory compliance

Lower operational costs, by minimizing cloud dependency

Several use cases already leverage on-device AI at scale:

Smartphones executing voice commands, object detection, and summarization entirely on-device

Wearables analyzing biometric signals for health diagnostics

Home automation devices performing local audio and visual inference for personalization and security

Enterprise devices applying lightweight computer vision models for safety and monitoring tasks

However, widespread adoption remains constrained by persistent limitations across the AI lifecycle, from model development to production deployment.

On-device AI is often associated with enhanced privacy, given that user data does not need to be transmitted to the cloud. While this offers a baseline advantage, true privacy requires deeper architectural safeguards.

Privacy must be designed in actively and continuously across the model lifecycle, from training and distribution through personalization. Without end-to-end safeguards, the privacy promise of edge AI remains incomplete.
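One widely used safeguard for on-device personalization, borrowed from differentially private federated learning, is to clip each locally computed model update and add calibrated Gaussian noise before anything leaves the device. The sketch below shows only that mechanism. The function names are hypothetical, it does not describe any specific product's implementation, and choosing `noise_multiplier` to meet a formal privacy budget requires a privacy accountant that is not shown here.

```python
import math
import random
from typing import List, Optional


def l2_norm(vec: List[float]) -> float:
    """Euclidean (L2) norm of a flat parameter-update vector."""
    return math.sqrt(sum(x * x for x in vec))


def clip_update(update: List[float], max_norm: float) -> List[float]:
    """Rescale the update so its L2 norm is at most max_norm (bounds any one user's influence)."""
    norm = l2_norm(update)
    if norm <= max_norm:
        return list(update)
    scale = max_norm / norm
    return [x * scale for x in update]


def privatize_update(
    update: List[float],
    max_norm: float,
    noise_multiplier: float,
    rng: Optional[random.Random] = None,
) -> List[float]:
    """Clip, then add Gaussian noise with std = noise_multiplier * max_norm per coordinate.

    Only the returned vector would be shared; the raw user data stays on the device.
    """
    if rng is None:
        rng = random.Random()
    clipped = clip_update(update, max_norm)
    sigma = noise_multiplier * max_norm
    return [x + rng.gauss(0.0, sigma) for x in clipped]


# Hypothetical usage: a locally computed update for a tiny personalization head.
local_update = [3.0, 4.0]  # L2 norm 5.0, so it will be clipped to norm 1.0
shared = privatize_update(local_update, max_norm=1.0, noise_multiplier=1.1, rng=random.Random(0))
print(shared)
```

Clipping bounds how much any single device can shift the shared model, and the noise scale is tied to that bound, which is what lets the guarantee be analyzed formally.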

Developing for on-device AI currently requires navigating a fragmented and low-level ecosystem:

Multiple, incompatible SDKs (e.g., Core ML, LiteRT, ONNX Runtime) with varying operator support and performance characteristics

Limited cross-platform abstractions, forcing developers to tailor implementations for each hardware target

Sparse observability tools, with inadequate debugging and profiling support at runtime

The lack of consistent, high-level tooling significantly increases development time and operational overhead, limiting innovation and experimentation.
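The fragmentation described above often surfaces as a placement decision: each backend (for example, an NPU path versus a GPU or CPU path) supports a different operator set, and a model should run on the most preferred backend that can execute all of its operators. The sketch below models that decision in plain Python. `Backend`, `select_backend`, `unsupported_ops`, and the operator names are hypothetical and do not correspond to Core ML, LiteRT, or ONNX Runtime APIs; some real runtimes also partition a single graph across several backends instead of picking one.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Optional, Set


@dataclass(frozen=True)
class Backend:
    """Hypothetical inference backend and the operators it can execute."""
    name: str
    supported_ops: FrozenSet[str]
    priority: int  # lower value = preferred (e.g. NPU before GPU before CPU)

    def supports(self, ops: Set[str]) -> bool:
        # Subset test: every op in the model must be implemented by this backend.
        return ops <= self.supported_ops


def select_backend(model_ops: Set[str], available: List[Backend]) -> Optional[Backend]:
    """Return the most preferred backend that can run every op, or None if none can."""
    for backend in sorted(available, key=lambda b: b.priority):
        if backend.supports(model_ops):
            return backend
    return None


def unsupported_ops(model_ops: Set[str], backend: Backend) -> Set[str]:
    """Ops that rule a backend out; useful when debugging why a model fell back."""
    return model_ops - backend.supported_ops


npu = Backend("npu", frozenset({"conv2d", "relu", "matmul"}), priority=0)
gpu = Backend("gpu", frozenset({"conv2d", "relu", "matmul", "softmax"}), priority=1)
cpu = Backend("cpu", frozenset({"conv2d", "relu", "matmul", "softmax", "layer_norm"}), priority=2)

model = {"conv2d", "relu", "softmax"}
chosen = select_backend(model, [cpu, gpu, npu])
print(chosen.name)                          # gpu
print(sorted(unsupported_ops(model, npu)))  # ['softmax']
```

Here a single missing operator pushes the whole model off the NPU, which is exactly the kind of per-target behavior that developers currently have to discover and work around by hand.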

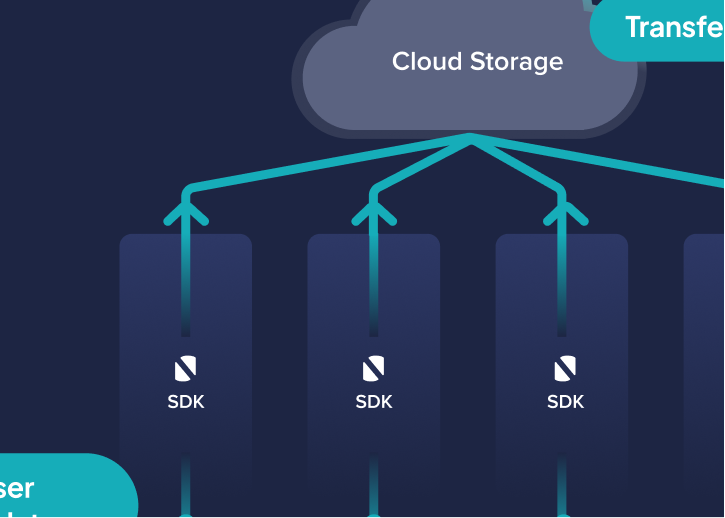

Unlike cloud AI, which benefits from established CI/CD pipelines and robust DevOps practices built over the last decade, on-device AI lacks standardized infrastructure for:

The operational complexity of deploying, maintaining, and scaling AI models across millions of heterogeneous devices is a critical unsolved problem. Device fleets span a diverse mix of CPUs, GPUs, and NPUs, operating system versions, and hardware manufacturers, and this complexity must be addressed if on-device AI is to become mainstream.
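Two building blocks that cloud deployment pipelines take for granted are choosing the right artifact for each target and releasing gradually. The sketch below assumes a hypothetical manifest in which each model ships in several builds (an INT8 NPU build, an FP16 GPU build, and a CPU fallback) and each device reports its capabilities; it picks the best-fitting build and uses a hash of the device ID to place devices into a staged rollout. `DeviceProfile`, `ModelVariant`, `pick_variant`, `in_rollout`, and all field names are illustrative, not part of any existing SDK.

```python
import hashlib
from dataclasses import dataclass
from typing import FrozenSet, List, Optional


@dataclass(frozen=True)
class DeviceProfile:
    """Hypothetical capability report sent by a device."""
    accelerators: FrozenSet[str]  # e.g. {"npu", "gpu", "cpu"}
    os_version: int
    free_memory_mb: int


@dataclass(frozen=True)
class ModelVariant:
    """Hypothetical build of the same model for one kind of target."""
    name: str
    accelerator: str
    min_os_version: int
    memory_mb: int
    rank: int  # lower = preferred when several variants fit


def pick_variant(device: DeviceProfile, variants: List[ModelVariant]) -> Optional[ModelVariant]:
    """Choose the preferred variant whose requirements the device satisfies."""
    compatible = [
        v for v in variants
        if v.accelerator in device.accelerators
        and device.os_version >= v.min_os_version
        and device.free_memory_mb >= v.memory_mb
    ]
    return min(compatible, key=lambda v: v.rank, default=None)


def in_rollout(device_id: str, release: str, percent: float) -> bool:
    """Deterministically place a device in the first `percent`% of a staged rollout."""
    digest = hashlib.sha256(f"{release}:{device_id}".encode()).digest()
    bucket = int.from_bytes(digest[:8], "big") % 10_000  # bucket in 0..9999
    return bucket < percent * 100


variants = [
    ModelVariant("int8-npu", accelerator="npu", min_os_version=14, memory_mb=300, rank=0),
    ModelVariant("fp16-gpu", accelerator="gpu", min_os_version=12, memory_mb=600, rank=1),
    ModelVariant("int8-cpu", accelerator="cpu", min_os_version=10, memory_mb=300, rank=2),
]
phone = DeviceProfile(accelerators=frozenset({"gpu", "cpu"}), os_version=13, free_memory_mb=1024)
print(pick_variant(phone, variants).name)  # fp16-gpu
print(in_rollout("device-1234", "model-v2", percent=5.0))
```

Hashing `release:device_id` rather than drawing a random number keeps each device's assignment stable across restarts, and salting with the release name changes which devices go first for each new release.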

Several macro trends are converging to create a pivotal moment for on-device AI:

Modern devices are increasingly equipped with high-performance neural processing units (NPUs) capable of 15–20 TOPS (trillions of operations per second). This enables on-device execution of complex models, including vision transformers, multimodal fusion pipelines, and compact language models. The computational foundation exists, but the software ecosystem must catch up.
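To put 15–20 TOPS in perspective for compact language models, a common rule of thumb is that generating one token with a dense decoder-only transformer costs roughly 2 operations (one multiply, one add) per parameter. The back-of-envelope sketch below compares that compute ceiling with a memory-bandwidth ceiling. The 0.6B parameter count, INT8 weights (1 byte per parameter), and 50 GB/s memory bandwidth are assumptions chosen for illustration, not measurements of any device, and the estimate ignores attention and KV-cache costs.

```python
def compute_bound_tokens_per_s(params: float, tops: float, utilization: float = 1.0) -> float:
    """Upper bound on decode speed if arithmetic were the only limit.

    Assumes ~2 operations per parameter per generated token; `utilization` is the
    fraction of peak TOPS actually achieved (1.0 = theoretical peak).
    """
    ops_per_token = 2.0 * params
    return tops * 1e12 * utilization / ops_per_token


def memory_bound_tokens_per_s(params: float, bytes_per_param: float, bandwidth_gb_s: float) -> float:
    """Upper bound if every weight must be read from memory once per generated token."""
    bytes_per_token = params * bytes_per_param
    return bandwidth_gb_s * 1e9 / bytes_per_token


params = 0.6e9  # assumed model size
compute_ceiling = compute_bound_tokens_per_s(params, tops=15.0)
memory_ceiling = memory_bound_tokens_per_s(params, bytes_per_param=1.0, bandwidth_gb_s=50.0)
print(f"compute-bound: {compute_ceiling:,.0f} tokens/s")  # compute-bound: 12,500 tokens/s
print(f"memory-bound:  {memory_ceiling:,.0f} tokens/s")   # memory-bound:  83 tokens/s
```

Under these assumptions the compute ceiling is two orders of magnitude above the bandwidth ceiling, which is why single-stream token generation on devices tends to be limited by memory bandwidth rather than peak TOPS, and why shrinking weights through quantization matters.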

Governments and regulators worldwide are placing stronger emphasis on data sovereignty and user control, with legislation such as:

On-device AI aligns well with these requirements by minimizing unnecessary data transfers and returning ownership of data to users. It supports fair use of data and compliance with data sovereignty and geo-fencing regulations, while still enabling businesses to deliver personalized experiences to their users.

Through our work with regulatory institutions such as the UN and ISO, we have seen that regulations adapt as technology evolves, which creates a need to improve systems continuously and to prepare infrastructure in advance. On-device AI is one such pillar of future-proof AI infrastructure. Building AI systems that are not just performant but privacy-resilient by design will be a competitive advantage.

Critical use cases across industries such as automotive, industrial automation, predictive maintenance, healthcare diagnostics, and augmented reality require sub-100ms latency and constant uptime. Cloud connectivity is neither fast enough nor reliable enough for such tasks. On-device AI offers a robust alternative, provided that deployment and monitoring challenges are addressed.
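Whether a workload actually meets a sub-100ms requirement is a question about tail latency rather than averages: a model that is fast at the median can still miss its budget on a meaningful share of requests. The sketch below summarizes measured on-device inference latencies with nearest-rank percentiles and reports the fraction of requests within budget. The function names, the 100 ms default, and the sample values are illustrative; how samples are collected (for example, timers around each inference call, grouped by device model and OS version) is left out.

```python
import math
from typing import Dict, Sequence


def percentile(samples: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile for pct in (0, 100]."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    # Multiply before dividing so integer inputs stay exact in floating point.
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def latency_report(samples_ms: Sequence[float], budget_ms: float = 100.0) -> Dict[str, float]:
    """Median, p99, and share of requests that finished within the latency budget."""
    within = sum(1 for s in samples_ms if s <= budget_ms) / len(samples_ms)
    return {
        "p50_ms": percentile(samples_ms, 50),
        "p99_ms": percentile(samples_ms, 99),
        "within_budget": within,
    }


# Illustrative input: nine fast inferences and one slow outlier.
measured_ms = [18.0, 21.5, 19.2, 22.8, 20.1, 140.0, 19.9, 23.4, 20.7, 21.0]
report = latency_report(measured_ms, budget_ms=100.0)
print(report)  # {'p50_ms': 20.7, 'p99_ms': 140.0, 'within_budget': 0.9}
```

In this example the median sits comfortably inside the budget while p99 exceeds it, which is the signal that aggregate averages hide and that runtime observability tooling needs to surface per device.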

Apple, at WWDC 2025, introduced its Foundation Models framework, tightly integrated with iOS to enable private, low-latency inference across devices.

Google, during its I/O 2025 event, launched the Edge Gallery app and the Gemma 3n model, designed specifically for efficient on-device performance.

Microsoft announced Foundry Local, its new framework for running transformer models locally with enterprise-grade control.

Alibaba released its Qwen3 family, including compact models such as Qwen3-0.6B that demonstrate strong reasoning and tool-calling abilities while remaining small enough for low-power hardware.

NVIDIA Research has been doubling down on Small Language Models (SLMs), identifying them as key enablers of scalable, real-time AI at the edge.

These developments signal a clear shift toward making on-device AI more viable and performant.

Yet, despite these advancements, developer adoption and real-world integration into the app ecosystem remain limited. The core gaps lie in inconsistent tooling, lack of standardized deployment workflows, and the steep learning curve involved in aligning models with device constraints - both compute and compliance. Bridging this last mile remains critical to move from proof-of-concept to production-scale use.

On-device AI presents one of the largest untapped frontiers in AI infrastructure. While the hardware has matured rapidly, thanks to advances in mobile chipsets, NPUs, and edge accelerators, the software stack remains significantly underdeveloped. What’s needed is a purpose-built platform that spans the entire lifecycle: from model optimization and deployment to runtime orchestration, security, monitoring, and integration.

Equally important is the emergence of a marketplace for lightweight AI agents - modular, task-specific models or workflows that can be easily deployed, combined and adapted across devices. This would empower developers to build rich, intelligent experiences without having to reinvent the wheel for every application or edge use case.

Key building blocks of this on-device AI stack include:

Model optimization for real-world device constraints (e.g., quantization, pruning, sparsity)

Privacy-first design at every layer, from user data ingestion across apps to model inference

Unified tooling for development, validation, deployment, and monitoring

Scalable pipelines for model delivery and lifecycle management
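As a concrete illustration of the model optimization item, the two simplest compression techniques fit in a few lines: symmetric per-tensor INT8 quantization, which stores each weight as an 8-bit integer plus one shared floating-point scale (roughly a 4x size reduction versus FP32), and unstructured magnitude pruning, which zeroes the smallest weights. This is a plain-Python sketch over a flat list of weights with hypothetical function names; production toolchains typically add per-channel scales, calibration data, and structured sparsity patterns that hardware can actually accelerate.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor INT8 quantization: w ≈ scale * q with q in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # round() is round-half-to-even; the clamp guards against floating-point overshoot.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: List[int], scale: float) -> List[float]:
    """Recover approximate float weights; error per weight is at most scale / 2."""
    return [scale * v for v in q]


def magnitude_prune(weights: List[float], sparsity: float) -> List[float]:
    """Zero out the smallest-magnitude fraction `sparsity` of weights (unstructured)."""
    k = int(len(weights) * sparsity)
    if k == 0:
        return list(weights)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:k]:
        pruned[i] = 0.0
    return pruned


weights = [0.5, -1.27, 0.01, 0.2]
q, scale = quantize_int8(weights)
restored = dequantize_int8(q, scale)
print(q)                                       # [50, -127, 1, 20]
print(magnitude_prune(weights, sparsity=0.5))  # [0.5, -1.27, 0.0, 0.0]
```

Quantization trades a bounded rounding error for smaller weights and cheaper integer arithmetic, while pruning only saves compute when the runtime or hardware can skip the zeros, which is why structured sparsity is usually preferred on device.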

At NimbleEdge, we are building the missing infrastructure for on-device AI. Our mission is to enable developers and enterprises to:

Build and deploy advanced models locally without compromising on performance or privacy

Navigate the complexity of heterogeneous hardware through intuitive tooling and familiar APIs, with Python-driven workflows that run on-device

Manage AI models and agentic workflows at scale with production-grade deployment and observability solutions

We envision a future where intelligence is not limited by connectivity or cloud access but embedded directly into the device, application, and context where it’s needed most.

This article marks the beginning of a multi-part series exploring the technical and operational landscape of on-device AI. Upcoming posts will delve into:

Advanced optimization techniques for local inference

Privacy-preserving strategies for on-device personalization

Scalable model deployment frameworks across diverse hardware

Case studies, benchmarks, and open research challenges

If you are building the next generation of intelligent, responsive, and privacy-conscious applications, we invite you to follow along.

Register to access NimbleEdge AI - https://www.nimbleedge.com/nimbleedge-ai-early-access-sign-up

Join our Discord community - https://discord.gg/y8WkMncstk

The World’s First Open-Source, On-Device Agentic AI Platform

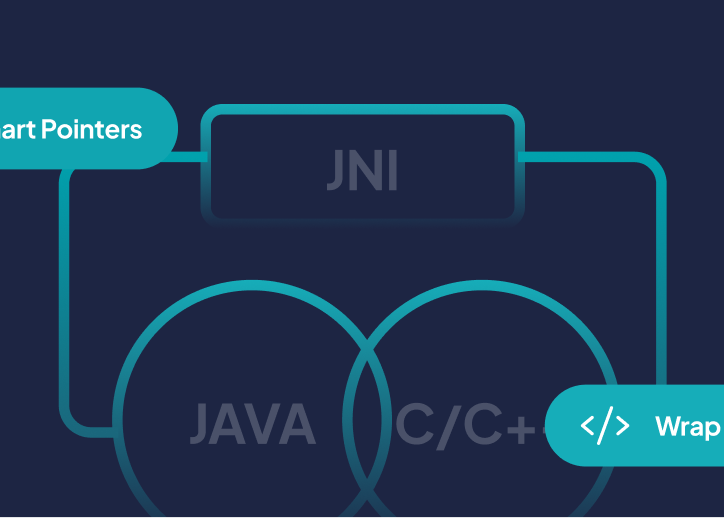

Ever felt Ever felt like JNI was more of a puzzle box than a useful tool? Fear not—today we’re diving deep into JNI without the headaches! 🚀

Mobile apps across verticals today offer a staggering variety of choices - thousands of titles on OTT apps, hundreds of restaurants on food delivery apps, and dozens of hotels on travel booking apps. While this abundance should be a source of great convenience for users, the width of assortments itself is driving significant choice paralysis for users, contributing to low conversion and high customer churn for apps.