The Mobile-First AI Movement Is Just Starting

By 2026, over 70% of smartphones will have dedicated on-device AI capabilities, up from just 15% in 2022 [1]. This rapid shift isn’t just about faster processors or edge compute; it signals the dawn of a new era: the mobile-first AI movement.

While AI development has largely been cloud-centric, we’re now seeing a fundamental shift. The center of gravity is moving from cloud data centers to the device in your hand. At NimbleEdge, we believe this transition is not only inevitable but essential for the next generation of personal, private and intelligent applications.

Today’s smartphones are powerful. Flagship chips like Apple’s A17 Pro and Qualcomm’s Snapdragon 8 Gen 3 feature dedicated neural processing units (NPUs) capable of trillions of operations per second. But raw performance is only one piece of the puzzle.

Mobile-first AI isn’t just about running AI models on your phone. It’s about rethinking how we design, deploy and optimize AI experiences, from the ground up, for mobile environments.

This movement matters because it solves four critical limitations of cloud-based AI:

Latency - On-device AI delivers real-time results without relying on a round trip to a data center. Whether it’s voice assistants, camera enhancements or personal automation, near-zero latency changes the user experience entirely.

Privacy - Sensitive data never leaves the device. From personal health data to behavioral insights, on-device processing ensures user-first privacy, eliminating the need to share raw data with external servers.

Availability - No network? No problem. Offline access to intelligent features ensures continuity, whether you’re in a subway or on a plane. This unlocks a truly global and reliable AI experience.

Cost - Cloud inference costs scale rapidly with usage. On-device AI drastically reduces operational expenses by eliminating per-inference cloud costs, which keeps it economically viable as usage grows.
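To make the cost point concrete, here is a back-of-the-envelope sketch of how per-inference cloud fees grow with usage. The function name and every figure in it (requests per user, price per 1,000 requests) are hypothetical placeholders chosen only to show the linear scaling, not real pricing from any provider. The sketch also leaves out the costs that on-device AI still carries, such as model delivery and engineering effort.

```python
def monthly_cloud_cost(users, requests_per_user_per_day, price_per_1k_requests, days=30):
    """Per-inference cloud fees grow linearly with total request volume."""
    total_requests = users * requests_per_user_per_day * days
    return total_requests / 1000 * price_per_1k_requests


# Hypothetical inputs: 20 requests per user per day at $0.50 per 1,000 requests.
for users in (10_000, 100_000, 1_000_000):
    cost = monthly_cloud_cost(users, requests_per_user_per_day=20, price_per_1k_requests=0.50)
    print(f"{users:>9,} users: ${cost:,.0f} per month in per-inference fees")
```

Because the fee is charged per request, a tenfold increase in users means a tenfold increase in the bill. Moving inference on-device removes that usage-proportional term.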

Most AI stacks today are bloated, power-hungry and optimized for server GPUs. To go mobile-first, we need a new architectural approach.

At NimbleEdge, we’re reengineering the stack across four layers:

Model Design - We use lightweight transformer-based models tailored for mobile environments. These are quantized, pruned, and compiled to run on-device with minimal compute overhead. We also exploit model sparsity so that larger models run performantly with lower memory consumption.

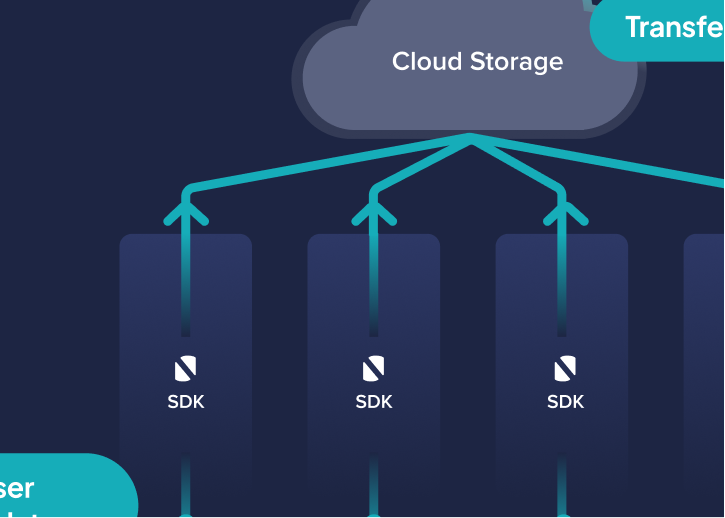

OS and Hardware Optimization - By aligning closely with Core ML on iOS, NNAPI on Android, and chip-level SDKs (like the Qualcomm AI Engine and the Apple Neural Engine), we ensure our models run natively and efficiently.

Personalization Engine - We use user-generated signals (e.g., app behavior, usage patterns) to create context-aware and adaptive AI agents without ever sending raw data to the cloud.

Python Layer - To enable developer agility, we’ve built a flexible Python interface that abstracts away the complexity of mobile deployment. It allows data scientists and ML engineers to prototype, test, and deploy models to mobile devices without rewriting them in native code.
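The quantization and pruning steps named under Model Design can be illustrated with a minimal, dependency-free sketch. It is a generic textbook illustration, not NimbleEdge's or DeliteAI's implementation: the helper names (`quantize_int8`, `dequantize`, `prune_by_magnitude`) and the sample weights are assumptions made up for this example. It shows magnitude pruning (zeroing the smallest weights) followed by symmetric per-tensor int8 quantization.

```python
def prune_by_magnitude(weights, sparsity):
    """Zero out the fraction `sparsity` of weights with the smallest magnitude."""
    k = int(len(weights) * sparsity)
    # Indices sorted from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(order[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


def quantize_int8(weights):
    """Symmetric per-tensor quantization: w is approximated by scale * q, q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map int8 codes back to approximate float weights."""
    return [v * scale for v in q]


# Made-up weights standing in for one small layer.
weights = [0.82, -0.03, 0.41, -1.27, 0.005, 0.19, -0.64, 0.02]

pruned = prune_by_magnitude(weights, sparsity=0.5)  # half the weights become zero
q, scale = quantize_int8(pruned)                    # 1 byte per weight instead of 4
restored = dequantize(q, scale)

max_error = max(abs(a - b) for a, b in zip(pruned, restored))
print("int8 codes:", q)
print("max round-trip error:", max_error)
```

Pruning makes the tensor sparse, which is what allows a runtime to skip or compress the zeros. Quantization then stores each remaining weight in one byte, at the price of a rounding error bounded by half the scale.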

Mobile-first AI is already enabling new classes of experiences:

Voice Assistants: Fully offline, personalized command execution (e.g., setting alarms, dictation) with better responsiveness.

Generative AI: Running LLMs like Llama 3 and Gemma locally for short-form summarization, translation, and even co-pilot experiences.

Vision Intelligence: Real-time, on-device object detection and scene understanding in AR/VR and camera applications.

Wellness and Productivity: AI-driven journaling, mood tracking, and habit formation that stay private by default.

While the momentum is real, mobile-first AI isn’t without its roadblocks:

Model Compression Tradeoffs: Balancing model size with inference quality is a technical art still being perfected.

Hardware Fragmentation: Varying chipsets and ML SDK support across Android devices make cross-platform deployment non-trivial.

Developer Tooling: Unlike the cloud, mobile lacks standardized, open-source frameworks that simplify on-device AI deployment.
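The compression tradeoff in the first item above can be seen in a small experiment. This is a sketch under stated assumptions: the weights are synthetic (generated from a sine function, not taken from a real model), the helper names are hypothetical, and weight round-trip error is only a rough proxy for inference quality. It quantizes the same weights at 8, 4, and 2 bits and reports storage size alongside the resulting error.

```python
import math


def quantize_roundtrip(weights, bits):
    """Quantize to a signed `bits`-bit grid, then map back to floats."""
    levels = 2 ** (bits - 1) - 1  # 127 for 8 bits, 7 for 4 bits, 1 for 2 bits
    max_abs = max(abs(w) for w in weights) or 1.0
    scale = max_abs / levels
    return [max(-levels, min(levels, round(w / scale))) * scale for w in weights]


def rms_error(a, b):
    """Root-mean-square difference between two equally long sequences."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / len(a))


# Deterministic synthetic weights, used only for illustration.
weights = [math.sin(i * 0.37) * 0.5 for i in range(1000)]

for bits in (8, 4, 2):
    approx = quantize_roundtrip(weights, bits)
    size_bytes = len(weights) * bits / 8
    print(f"{bits}-bit: {size_bytes:.0f} bytes, RMS error {rms_error(weights, approx):.4f}")
```

Each halving of the bit width halves the storage but coarsens the grid the weights are rounded to, so the error grows. Choosing the point on that curve where quality is still acceptable is the tradeoff described above.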

This is where NimbleEdge steps in with DeliteAI, an open-source, modular platform purpose-built for the next era of mobile intelligence.

Our mission is to empower developers and product teams to build privacy-first, personalized, on-device AI for real-world mobile use cases. With support for real-time inference, intuitive APIs, and model lifecycle management, all running locally, we’re redefining what’s possible on your phone.

We believe the future of AI isn’t centralized - it’s distributed, personal and mobile-first.

The AI ecosystem is waking up to the reality that user expectations are shifting from cloud-connected smarts to deeply personal, always-available intelligence. The smartphone isn’t just a delivery vehicle anymore; it’s the home of AI itself.

The movement has only just begun. The hardware is ready. The models are catching up. And with platforms like NimbleEdge, the ecosystem is about to change forever.

Let’s build the future - on-device, by default.

Check out DeliteAI on GitHub: https://github.com/NimbleEdge/deliteAI

Critical use cases served by on-device AI across industries

Mobile apps across verticals today offer a staggering variety of choices: thousands of titles on OTT apps, hundreds of restaurants on food delivery apps, and dozens of hotels on travel booking apps. While this abundance should be a source of great convenience, the sheer breadth of the assortment is driving significant choice paralysis among users, contributing to low conversion and high customer churn for apps.

On-Device AI models that understand your users in real-time