On-Device AI Challenge & Solutions

On-Device AI: Why It’s Both the Biggest Challenge and the Ultimate Solution for the Future of Computing

Artificial Intelligence has become synonymous with the cloud. From voice assistants to recommendation engines, most AI experiences today rely on powerful servers processing data in remote data centers. But as models get larger and consumer expectations shift toward privacy, speed and personalization, a new paradigm is emerging: on-device AI.

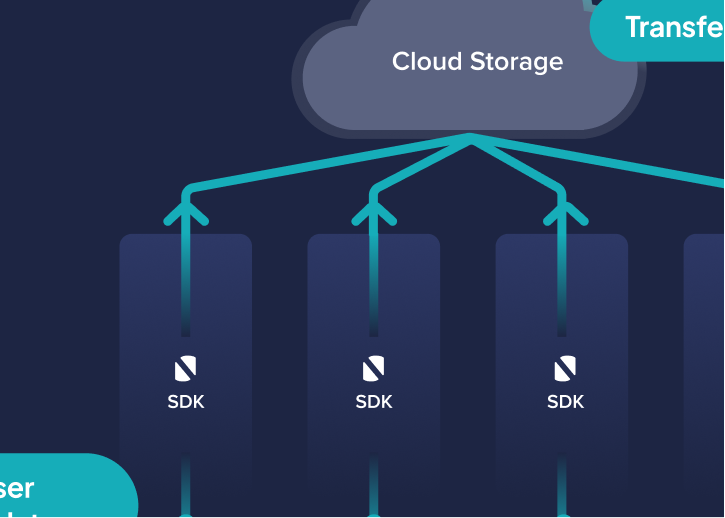

On-device AI refers to running AI models directly on smartphones, laptops, wearables or IoT devices without constant reliance on the cloud. It is, at once, a technological challenge and a transformative solution.

Building AI that runs natively on devices requires overcoming constraints that cloud-based systems don't face, most notably limited compute and energy.

Despite these challenges, the benefits of on-device AI make it an inevitable future.

Privacy and Security by Design

Latency and Reliability

Cost and Sustainability

Personalization at Scale

Tech giants and startups alike are investing heavily in this shift:

This ecosystem shows that the future of AI depends not just on smarter models but also on smarter deployment strategies.

At NimbleEdge, we believe the future of AI lies in mobile-first intelligence. Our platform makes it possible for developers to build applications that:

By removing the cloud as a bottleneck, we enable enterprises and developers to reimagine what AI can do, whether in personalized education apps, healthcare monitoring or productivity assistants.

On-device AI today feels like cloud computing in the early 2000s: full of technical hurdles but inevitable in its trajectory. As hardware accelerators evolve, model optimization improves and developer ecosystems mature, on-device AI will become the default, not the exception.

What started as a constraint, limited compute and energy, will soon be reframed as an advantage: AI that is private, responsive, cost-effective and personalized.

For organizations, this means now is the time to invest, experiment and prepare. The companies that adopt on-device AI today will define the user experiences of tomorrow.

Join our Discord community to learn more about how NimbleEdge is advancing the on-device AI ecosystem.
